Instagram has launched new protection measures to give teens more age-appropriate experiences on Instagram and other Meta platforms. Announcing the changes on his broadcast channel, Instagram head Adam Mosseri said the company is starting to hide more types of content that experts say may not be appropriate for young people, including content related to suicide, self-injury, and drugs.

Instagram followed up with a full statement on its announcements blog, saying it wants teens to have safe, age-appropriate experiences on its apps. To that end, the company says it has developed more than 30 tools and resources to support teens and their parents, and has spent over a decade developing policies and technology to address content that breaks its rules or could be seen as sensitive.

New Content Policies for Teens.

Over the years, Meta has regularly consulted experts in adolescent development, psychology, and mental health to help make its platforms safe and age-appropriate for young people, including to improve its understanding of which types of content may be less appropriate for teens.

Now, Meta will start removing sensitive content, along with other types of age-inappropriate content, from teens’ experiences on Instagram and Facebook. Meta already aims not to recommend this type of content to teens in places like Reels and Explore, and with these changes, it will no longer show it to teens in Feed and Stories, even if it is shared by someone they follow.

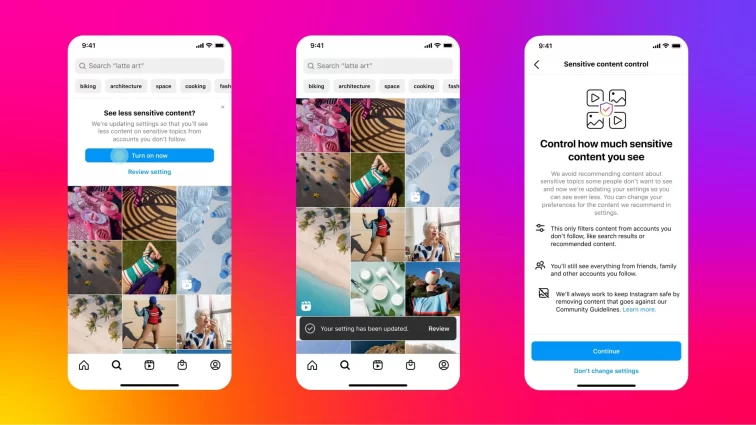

Updates to Instagram’s and Facebook’s Content Recommendation Settings for Teens.

Meta is automatically placing teens into the most restrictive content control setting on Instagram and Facebook. This setting already applies to teens when they first join Instagram and Facebook, and it is now being expanded to teens who already use these apps. The content recommendation controls, known as “Sensitive Content Control” on Instagram and “Reduce” on Facebook, make it more difficult for people to come across potentially sensitive content or accounts in places like Search and Explore.

Hiding More Results in Instagram Search Related to Suicide, Self-Harm and Eating Disorders.

While Meta allows people to share content discussing their own struggles with suicide, self-harm, and eating disorders, its policy is not to recommend this content, and it has been working on ways to make it harder to find. Now, when people search for terms related to suicide, self-harm, and eating disorders, Meta will start hiding the related results and will direct them to expert resources for help. Meta already hides results for suicide and self-harm search terms that inherently break its rules, and it is now extending this protection to include more terms. This update will roll out for everyone over the coming weeks.

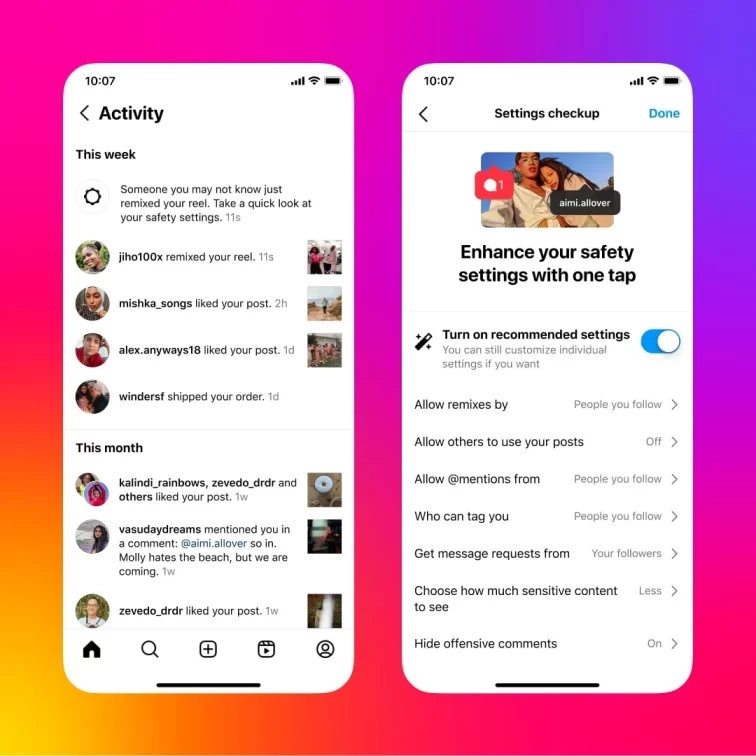

Prompting Teens to Easily Update Their Privacy Settings

To help make sure teens regularly check their safety and privacy settings on Instagram, and are aware of the more private settings available, Meta will send new notifications encouraging them to update their settings to a more private experience with a single tap. If teens choose to “Turn on recommended settings”, Meta will automatically change their settings to restrict who can repost their content, tag or mention them, or include their content in Reels Remixes. Meta will also ensure that only their followers can message them and will help hide offensive comments.